Hello, I’m rather new to the whole logging stack.

I need some help. My goal is to get traffic-flow data from my MikroTik router into my ELK server.

This is my elastiflow/flowcoll.yml:

```yaml
EF_ACCOUNT_ID: 6881d7a878b9253ef4a85a14
EF_LICENSE_ACCEPTED: true
EF_LICENSE_KEY: "Key"
EF_LOGGER_DEVELOPMENT_ENABLE: "true"
EF_LOGGER_ENCODING: console
EF_LOGGER_FILE_LOG_ENABLE: "true"
EF_LOGGER_FILE_LOG_FILENAME: /var/log/elastiflow/flowcoll/flowcoll.log
EF_LOGGER_LEVEL: debug
EF_FLOW_SERVER_UDP_IP: ELK_IP
EF_FLOW_SERVER_UDP_PORT: 2055,4739,6343,9995
EF_PROCESSOR_DECODE_IPFIX_ENABLE: "true"
EF_PROCESSOR_DECODE_NETFLOW5_ENABLE: "true"
EF_PROCESSOR_DECODE_NETFLOW7_ENABLE: "true"
EF_PROCESSOR_DECODE_NETFLOW9_ENABLE: "true"
EF_PROCESSOR_DURATION_PRECISION: ms
EF_PROCESSOR_KEEP_CPU_TICKS: "true"
EF_PROCESSOR_PERCENT_NORM: 100
EF_PROCESSOR_POOL_SIZE: 0
EF_PROCESSOR_TIMESTAMP_PRECISION: ms
EF_PROCESSOR_TRANSLATE_KEEP_IDS: default
EF_OUTPUT_ELASTICSEARCH_ADDRESSES: 127.0.0.1:9200
EF_OUTPUT_ELASTICSEARCH_ALLOWED_RECORD_TYPES: as_path_hop,flow_option,flow,ifa_hop,telemetry,metric,log
EF_OUTPUT_ELASTICSEARCH_CLIENT_CA_CERT_FILEPATH: ca.crt
EF_OUTPUT_ELASTICSEARCH_CLIENT_CERT_FILEPATH: elasticsearch.crt
EF_OUTPUT_ELASTICSEARCH_CLIENT_KEY_FILEPATH: elasticsearch.key
EF_OUTPUT_ELASTICSEARCH_ECS_ENABLE: "true"
EF_OUTPUT_ELASTICSEARCH_ENABLE: "true"
EF_OUTPUT_ELASTICSEARCH_INDEX_PERIOD: rollover
EF_OUTPUT_ELASTICSEARCH_INDEX_TEMPLATE_CODEC: best_compression
EF_OUTPUT_ELASTICSEARCH_INDEX_TEMPLATE_ENABLE: "true"
EF_OUTPUT_ELASTICSEARCH_INDEX_TEMPLATE_ILM_LIFECYCLE: elastiflow
EF_OUTPUT_ELASTICSEARCH_INDEX_TEMPLATE_REFRESH_INTERVAL: 20s
EF_OUTPUT_ELASTICSEARCH_INDEX_TEMPLATE_REPLICAS: 0
EF_OUTPUT_ELASTICSEARCH_INDEX_TEMPLATE_SHARDS: 3
EF_OUTPUT_ELASTICSEARCH_MAX_RETRIES: 1
EF_OUTPUT_ELASTICSEARCH_PASSWORD: _k9qx00x26BemjcEu7IX
EF_OUTPUT_ELASTICSEARCH_RETRY_BACKOFF: 1000
EF_OUTPUT_ELASTICSEARCH_RETRY_ENABLE: "true"
EF_OUTPUT_ELASTICSEARCH_STORAGE_OPTIMIZATION_ENABLE: "true"
EF_OUTPUT_ELASTICSEARCH_TIMESTAMP_SOURCE: collect
EF_OUTPUT_ELASTICSEARCH_TLS_CA_CERT_FILEPATH: "/etc/elasticsearch/certs/ca.crt"
EF_OUTPUT_ELASTICSEARCH_TLS_ENABLE: "true"
EF_OUTPUT_ELASTICSEARCH_TSDS_ENABLE: "true"
EF_OUTPUT_ELASTICSEARCH_USERNAME: elastic
```

MikroTik RouterOS 7 configuration:

```
/ip traffic-flow target print
Columns: SRC-ADDRESS, DST-ADDRESS, PORT, VERSION
   SRC-ADDRESS  DST-ADDRESS  PORT  VERSION
0  Mikrotik     ELK_IP       4739  ipfix

/ip traffic-flow print
              enabled: yes
           interfaces: all
        cache-entries: 32k
  active-flow-timeout: 1m
inactive-flow-timeout: 15s
      packet-sampling: no
    sampling-interval: 0
       sampling-space: 0
```
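A minimal Python sketch for cross-checking the two configurations above: it reads a flat `KEY: value` file like flowcoll.yml (assuming no nested YAML; a real YAML parser would be more robust) and confirms that the router's target port (4739) is among the `EF_FLOW_SERVER_UDP_PORT` values and that `EF_PROCESSOR_DECODE_IPFIX_ENABLE` is `true`. The helper names `parse_flat_config` and `check_ipfix_target` are hypothetical, not part of ElastiFlow. Only straight quotes are stripped, so a value saved with typographic quotes (“true”) would be flagged.

```python
# Hypothetical helpers (not part of ElastiFlow): cross-check flowcoll.yml
# against the MikroTik traffic-flow target (port 4739, version ipfix).


def parse_flat_config(text: str) -> dict[str, str]:
    """Parse flat 'KEY: value' lines; blank lines and '#' comments are skipped.

    Assumes no nested YAML. Only straight double quotes are stripped, so a
    value typed with typographic quotes is kept verbatim.
    """
    settings: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or ":" not in line:
            continue
        # Split on the first colon only, so "127.0.0.1:9200" stays intact.
        key, _, value = line.partition(":")
        settings[key.strip()] = value.strip().strip('"')
    return settings


def check_ipfix_target(settings: dict[str, str], target_port: int) -> list[str]:
    """Return a list of problems; an empty list means the basics line up."""
    problems: list[str] = []
    ports = {
        int(p)
        for p in settings.get("EF_FLOW_SERVER_UDP_PORT", "").split(",")
        if p.strip()
    }
    if target_port not in ports:
        problems.append(f"UDP port {target_port} is not in EF_FLOW_SERVER_UDP_PORT")
    if settings.get("EF_PROCESSOR_DECODE_IPFIX_ENABLE", "").lower() != "true":
        problems.append("EF_PROCESSOR_DECODE_IPFIX_ENABLE is not true")
    return problems
```

With the settings as listed above (straight quotes), `check_ipfix_target(settings, 4739)` returns an empty list, so this check only rules out a port or decoder mismatch; it says nothing about the Elasticsearch side.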

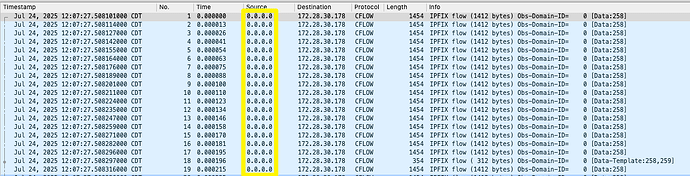

tcpdump on the server shows the packets arriving:

```
tcpdump -n -i any udp port 4739
17:46:21.636100 ens33 In IP Mikrotik.4739 > ELK_IP.4739: UDP, length 1412

101 packets captured
103 packets received by filter
0 packets dropped by kernel
```

So I know the router's packets are reaching the server, but I have no logs or data.
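The capture shows UDP packets from the router on port 4739, but not what they contain. A minimal Python sketch, assuming you have the raw UDP payload of one captured packet as bytes (for example, extracted from a `tcpdump -w` capture), that decodes the 16-byte IPFIX message header defined in RFC 7011: version (10 for IPFIX), message length, export time, sequence number and observation domain ID. `parse_ipfix_header` is a hypothetical helper, not part of ElastiFlow or tcpdump.

```python
import struct
from typing import NamedTuple

IPFIX_VERSION = 10  # RFC 7011: the Version field of an IPFIX message is 0x000a

# Message header: version, length, export time, sequence number,
# observation domain ID, all big-endian ("!"), 2+2+4+4+4 = 16 bytes.
_HEADER = struct.Struct("!HHIII")


class IpfixHeader(NamedTuple):
    version: int
    length: int                 # total message length in bytes, header included
    export_time: int            # seconds since the UNIX epoch
    sequence: int
    observation_domain_id: int


def parse_ipfix_header(payload: bytes) -> IpfixHeader:
    """Decode the IPFIX message header at the start of one UDP payload."""
    if len(payload) < _HEADER.size:
        raise ValueError("payload is shorter than the 16-byte IPFIX header")
    header = IpfixHeader(*_HEADER.unpack_from(payload))
    if header.version != IPFIX_VERSION:
        # 9 would indicate NetFlow v9, 5 NetFlow v5, and so on.
        raise ValueError(f"not IPFIX: version field is {header.version}")
    return header
```

The length field counts the whole message including the header, so for a datagram carrying a single message it should equal the 1412 bytes tcpdump reports.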

flowcoll.service is running, and its log shows a successful connection to Elasticsearch:

```
flowcoll.elasticsearch_output[default] …
healthcheck success; connection is available {"address": "127.0.0.1:9200"}
request succeeded {"address": "127.0.0.1:9200"}
```

I have imported the ECS Kibana data, not CODEX. My user Flow_read has `monitor` under cluster privileges and `read`, `view_index_metadata`, `create_index` and `write` under index privileges for `elastiflow-flow-ecs-*`, `elastiflow-path-esc-*` and `elastiflow-telemetry_flow-esc-*`, but there are no logs or data whatsoever.

When I started ElastiFlow, I did get two new indices, `elastiflow-path-ecs-8.0-2.5-rollover-000001` and `elastiflow-telemetry_flow-ecs-8.0-2.5-rollover-000001`, both with a document count of 0 and a size of 747b.
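To see how many documents each ElastiFlow index holds and whether the Flow_read role's index patterns actually cover those index names, a minimal Python sketch using only the standard library: it calls the Elasticsearch `_cat/indices` API with `format=json` and compares each returned name against the role patterns with `fnmatch`, which only approximates Elasticsearch's wildcard matching for simple `*` patterns. The patterns are copied as typed above and assumed to end in `*`; `fetch_cat_indices` and `summarize` are hypothetical helper names.

```python
import base64
import json
import ssl
import urllib.request
from fnmatch import fnmatchcase

# Flow_read index patterns, copied as typed in the post (assumed to end in "*").
ROLE_PATTERNS = [
    "elastiflow-flow-ecs-*",
    "elastiflow-path-esc-*",
    "elastiflow-telemetry_flow-esc-*",
]


def fetch_cat_indices(base_url: str, user: str, password: str, cafile: str) -> list[dict]:
    """GET _cat/indices for elastiflow-* as JSON (columns: index, docs.count, store.size)."""
    url = f"{base_url}/_cat/indices/elastiflow-*?format=json&h=index,docs.count,store.size"
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    req = urllib.request.Request(url, headers={"Authorization": f"Basic {token}"})
    ctx = ssl.create_default_context(cafile=cafile)  # trust the cluster's CA
    with urllib.request.urlopen(req, context=ctx) as resp:
        return json.load(resp)


def summarize(rows: list[dict], patterns: list[str]) -> list[tuple[str, int, bool]]:
    """For each index: (name, document count, covered by at least one pattern)."""
    summary = []
    for row in rows:
        name = row["index"]
        # Closed indices report no count; treated as 0 here.
        docs = int(row.get("docs.count") or 0)
        covered = any(fnmatchcase(name, p) for p in patterns)
        summary.append((name, docs, covered))
    return summary
```

A possible call is `summarize(fetch_cat_indices("https://127.0.0.1:9200", "elastic", "<password>", "/etc/elasticsearch/certs/ca.crt"), ROLE_PATTERNS)`; the URL, credentials and CA path are placeholders, and connecting to 127.0.0.1 over TLS assumes the node certificate is valid for that address.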

If I’m missing a configuration file, please let me know.

Obviously I’m missing something, so if anybody can point me in the right direction I would be most grateful.

Cheers